Part Three of Value-Delivery in the Rise-and-Decline of General Electric

Illusions of Destiny Controlled & the World’s Real Losses

By Michael J. Lanning––Copyright © 2022-23 All Rights Reserved

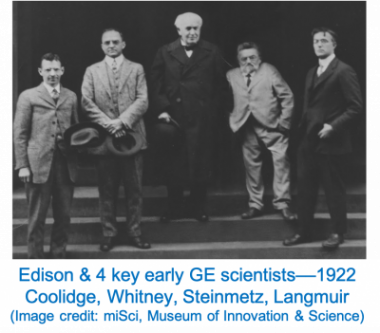

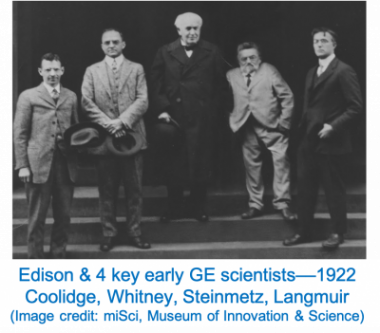

This is Part Three of our four-part series on GE’s great rise and eventual dramatic decline, seen through our value-delivery lens. Businesses as much as ever need strategies that deliver superior value to customers, including via major product-innovation. GE’s lengthy history offers striking lessons on this key challenge. Previous posts in this series provided an Introductory Overview, then Part One on GE’s first century (1878-1980), followed by Part Two on the Jack Welch era (1981-2010). Now, this Part Three reviews GE’s period of Digital Delusions (2011-2018). (Note from the author: special thanks to colleagues Helmut Meixner and Jim Tyrone for tremendously valuable help on this series.)

In 2011, in the wake of the global financial crisis, GE launched a “digital transformation,” a much-praised initiative aiming for major new business and revenues from software. The company had presciently foreseen explosive growth in the “Industrial Internet,” the installation and connection of digital technology with industrial equipment. In GE’s vision, this phenomenon would generate massive data about equipment performance and would enable the company to establish a powerful software platform as the “Operating System” for the Industrial Internet. GE therefore expected the initiative to pay off handsomely.

However, GE overestimated three factors: the potential benefit to industrial customers from applying this resulting data; the willingness of customers to share that data with GE; and the ability of GE to establish a viable software platform. As a result, this initiative produced very disappointing results. Even more important, it ignored the fundamental goal that should have been GE’s top priority after 2011: reestablishing its strategy––as prior to Jack Welch in 1981––for creating large, new, innovation-based product-businesses. Thus, the period of this transformation, 2011-2018, proved mostly one of Digital Delusions.

* * *

By 2011, GE had gone through two major strategic eras since its 1878 founding, and now needed new direction. In the first of these eras––GE’s first century, through 1980––it had created huge, profitable, electrically related product-businesses, via major product-innovations. However, after growth slowed in the 1970s, the impatient, aggressive Jack Welch and successor Jeff Immelt essentially abandoned that earlier strategy. Instead, in its second era––1981-2010––GE followed Welch’s radical, hybrid strategy, leveraging the strengths of its traditional product-businesses to dramatically expand its financial ones.

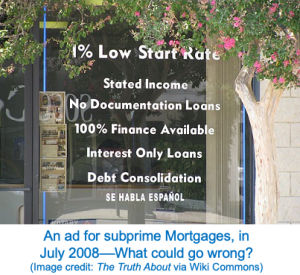

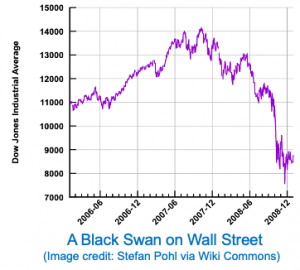

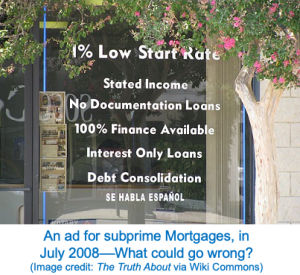

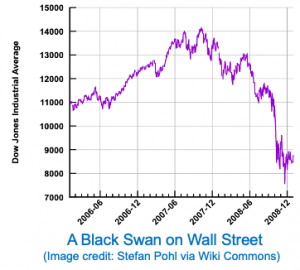

This ingenious strategy made GE the world’s most valued and admired corporation by 2000. Yet, longer-term it proved a major error. GE’s financial business could not sustain its hyper-fast growth much past 2000. Finally––riskier than the company had understood––this high-flying engine of the company’s growth largely collapsed in the 2008-2010 crisis.

In its third era––2011-2018––GE could have returned to its highly successful, first-era strategy. Instead, although downsizing its financial business, GE otherwise continued the key error of its second era––no longer creating large new, innovation-based product-businesses. GE’s star had faded somewhat, while the tech giants had begun to dominate the business-community’s imagination. GE wanted to retake leadership––so, it too would become a “digital” super-star. Welch’s GE had made a bad long-term bet on the hybrid strategy; Immelt’s GE now made another bad bet, this time, on “digital transformation.”

In this widely applauded transformation, GE formed a new business, GE Digital. It aimed to be a leading software provider, focused on reducing equipment-downtime and thus maintenance costs, and developed a major cloud-based software platform. By 2017, however, after GE had spent close to $7 billion on this digital transformation, it was clear that the company would not reach even 10% of its $15 billion software-revenue goal. By then, only 8% of GE’s industrial customers were using its cloud-based Predix software. In 2018, when GE Digital was spun off, it showed only $1.2 billion in annual software revenue. More important than missing its software dreams, GE in its third era would fail to reestablish a strategy for creating large new, innovation-based product-businesses. GE’s celebrated digital transformation would ultimately prove little more than a digital delusion.

After the Crisis––An Incomplete and Misguided Strategic-Refocus

In the wake of the global financial crisis, Immelt’s team did see the need to refocus GE on its traditional––non-financial––businesses. Revenues from GEC––its financial businesses––had peaked by 2000 at about 50% of total GE. They declined in the next decade but were still over 30% of GE in 2009. Reacting to the crisis, GE exited consumer finance, and GEC dropped below 12% of GE by 2010, to dwindle further in the next few years.

As the NY Times’ Steve Lohr writes in December 2010:

So G.E. has revamped its strategy in the wake of the financial crisis. Its heritage of industrial innovation reaches back to Thomas Edison and the incandescent light bulb, and with that legacy in mind, G.E. is going back to basics. The company, Mr. Immelt insists, must rely more on making physical products and less on financial engineering… Mr. Immelt candidly admits that G.E. was seduced by GE Capital’s financial promise––the lure of rapid-fire money-making unencumbered by the long-range planning, costs and headaches that go into producing heavy-duty material goods.

However, while GE did thus deemphasize its financial-businesses, it otherwise failed to completely refocus on its first-century strategy––creating huge new product-businesses via major innovation. While it could have grown its core, mature businesses––Electric Power, Lighting, Appliances, Jet Engines, and Medical Imaging––at GDP rate (2% for 2010-2015), GE wanted much faster growth. For that, it would have needed to enter other large markets with potential GE-synergies.

Sure enough, GE under Welch and Immelt had abandoned or neglected several such markets, including semiconductors, computers, electronics, and electric vehicles. Aggressively entering or reentering some of these, GE’s strategic roots might have enabled it to again deliver superior value and create major new businesses. Unfortunately, GE did not try this complete strategic refocus, choosing instead to follow the route of digital transformation.

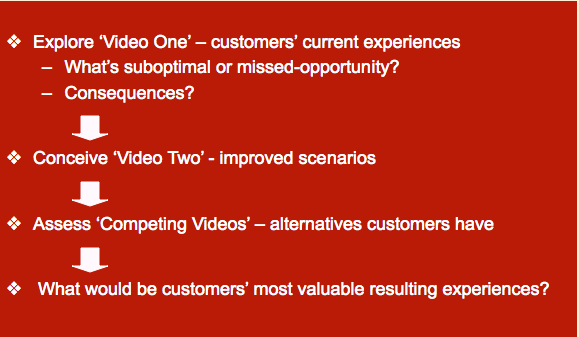

After 2011, GE foresaw the rise of the Industrial Internet, central to its vision of digital transformation. Millions of powerful-but-inexpensive sensors would be connected to industrial equipment, yielding enormous data. “Advanced Analytics,” including machine-learning and artificial intelligence (AI), could mine that Big Data for insights into equipment efficiency-and-performance improvements.

GE would, it said, become a “software and analytics company.” Starting in 2012, it developed a cloud-based software platform, Predix, meant to become the “operating system for the industrial internet.” In 2015, the company formed a new business, GE Digital. Hoping for major new revenues––like the earlier financial business––GE projected that it would become a “top-ten” software provider, with revenues by 2020 of $15 billion (~19% of product-business revenues). The business community and media applauded this trendy, new vision of digital transformation.

However, it was a muddled vision. GE had always supported its product businesses with a software function, but now tried to convert that support function into a separate business with its own revenues––GE Digital. This vision inevitably created confusion and conflict over the purpose of GE software applications––whether to focus primarily on supporting GE product-businesses, or on maximizing GE Digital’s software revenues.

Compounding this confusion, GE underestimated the challenges of developing all the software needed, and the need for customized, not generic, customer solutions. Having been early to focus on the Industrial Internet, GE over-confidently underestimated the intensity of competition that would quickly emerge for its software, and that already existed for the cloud-based platform. It also assumed that customers, including competitors, would share proprietary data, enabling GE’s analytics to uncover solutions. But many customers had no interest in sharing such sensitive data, nor in becoming reliant on GE software.

Foreseeing––and Betting Big On––the Industrial Internet

A 2012 GE paper predicted the onset of the Industrial Internet, which it argued would usher in a new era of innovation. This advance would be enabled in part by analytics, with their predictive algorithms. It would also be facilitated by millions of inexpensive industrial sensors, and by the connectivity provided by the Internet. In this vision, GE would play a leading role in developing and utilizing the Industrial Internet.

After Jeff Immelt announced this vision at a 2012 conference in San Francisco, the business media strongly embraced it. Soon, the Industrial Internet was also termed the Industrial Internet of Things (IIoT). In a 2014 Fast Company article, “Behind GE’s Vision for the IIoT,” Jon Gertner looks back at that presentation:

GE could no longer just build big machines like locomotives and jet engines and gas turbine power plants–“big iron,” … It now had to create a kind of intelligence within the machines, which would collect and parse their data. As [Immelt] saw it, the marriage of big-data analysis and industrial engineering promised a nearly unimaginable range of improvements.

GE correctly predicted that data generated by the IIoT, and analytics applied to that data, would both grow explosively. The company began investing in resources to play a leading role in the emerging IIoT. Laura Winig, in a 2016 MIT Sloan Review article, wrote that GE had “bet big” on the Industrial Internet, investing “$1 billion” in adding sensors to GE machines, connecting them to the cloud, and analyzing the resulting data to “improve machine productivity and reliability.” GE and others expected this digital transformation to pay off especially in one key benefit for industrial businesses: improved predictive maintenance.

Digital Transformation as Central GE Strategy

By 2016, Immelt’s team and others were referring to GE as the First Digital Industrial Company––a change they saw as a key element in digital transformation. Winig further comments on a GE advertising campaign, then recently launched:

The campaign was designed to recruit Millennials to join GE as Industrial Internet developers and remind them—using GE’s new watchwords, “The digital company. That’s also an industrial company.”—of GE’s massive digital transformation effort.

Ed Crooks, in the Financial Times in January 2016, discusses GE’s installation of systems for collecting and analyzing manufacturing data in a growing number of its factories:

The technology is just part of a radical overhaul designed to transform the 123-year-old group [GE] into what Jeff Immelt, chief executive since September 2001, calls a “digital industrial” company. At its core is a drive to use advances in sensors, communications and data analytics to improve performance both for itself and its customers.

“It is a major change, not only in the products, but also in the way the company operates,” says Michael Porter of Harvard Business School. “This really is going to be a game-changer for GE.”

However, it is not clear in retrospect why this transformation should have been such a priority. GE could and should have used the new digital technology to enable the value-delivery strategies of its product businesses, without transforming itself into a “Digital Industrial Company.” Nonetheless, conventional wisdom continued to insist that this digital transformation was mandatory. The business media and others seemed to agree that GE was ahead, and that industrial businesses needed to catch up. Steve Lohr, also in 2016 in the NY Times, discussed the widespread hype for GE’s software effort, quoting another Harvard Business School professor:

“The real danger is that the data and analysis becomes worth more than the installed equipment itself,” said Karim R. Lakhani.

In a 2017 Forbes article, Randy Bean and Thomas Davenport even issue a warning––GE had achieved its digital transformation:

Mainstream legacy businesses should take note. In a matter of only a few years, GE has migrated from being an industrial and consumer products and financial services firm to a “digital industrial” company with a strong focus on the “Industrial Internet” ….[achieved by] leveraging AI and machine learning fueled by the power of Big Data.

Also in 2017, HBR published an interview with Immelt, “How I Remade GE.” It implied that GE’s digital initiative had achieved another great GE triumph, thanks to the decision by Immelt and the company to go “All In.” As Immelt explains:

We’ve approached digital very differently from the way other industrial and consumer products companies have. Most say, “We’ll take an equity stake in a digital start-up, and that is our strategy.” To my mind, that’s dabbling. I wanted to get enough scale fast enough to make it meaningful.

Thus, GE had earlier enthusiastically launched GE Digital and the Predix platform business. However, during 2017 it was dawning on observers that GE’s digital transformation, while perhaps ahead of others’, showed no clear evidence of building a viable software business. Partly for this reason, Immelt was forced out of GE that year.

Lohr in the NY Times, later that year, writes that:

G.E. not only weathered the financial crisis but also made large investments. A crucial initiative has been to transform G.E. into a “digital-industrial” company, adding software and data analysis to its heavy equipment. But the digital buildup has been costly. G.E. will have invested $6.6 billion, from 2011 through the end of this year, with most of the spending in the last two years… Such investments, however, had a trade-off, as they sacrificed near-term profit for a hoped-for future payoff.

By 2018, the business media began to note the apparently widespread failure of digital transformations. Many consultants and academics had become devoted to the view that such transformation was mandatory, so many argued (and still argue today) that companies needed to try harder. However, the obstacles seemed formidable, as numerous companies fell short. In 2018, Davenport and George Westerman wrote in HBR:

In 2011, GE embarked upon an ambitious attempt to digitally transform its product and service offerings. The company created impressive digital capabilities, labeling itself a “digital industrial” company, embedding sensors into many products, building a huge new software platform for the Internet of Things, and transforming business models for its industrial offerings. The company received much acclaim for its transformation in the press (including some from us). However, investors didn’t seem to acknowledge its transformation. The company’s stock price has languished for years, and CEO Jeff Immelt…recently departed the company under pressure from activist investors.

Most fundamentally, GE’s Digital venture and its pursuit of digital transformation did not help the company redirect its strategy toward creating large new, innovation-based product-businesses. More specifically, GE in its third strategic era––2011-2018––made three bad bets in particular, focused on overestimations of: the value of reduced equipment-downtime; the willingness of customers to share proprietary information; and GE’s ability to provide a universal operating system for the industrial internet.

GE’s Bad Bet on the Inherent Universal Value of Reduced Downtime

Industrial companies had long worked to improve predictive maintenance (PM)––predicting and avoiding equipment-failure, thus reducing unplanned downtime and maintenance costs. After about 2010, improved PM was enabled, in principle, by large advances in the quantity of Big Data collected and in the power of analytics. Savings in GE’s own manufacturing seemed possible, but potential customer-value seemed especially exciting. As Gertner explains in his 2014 Fast Company article:

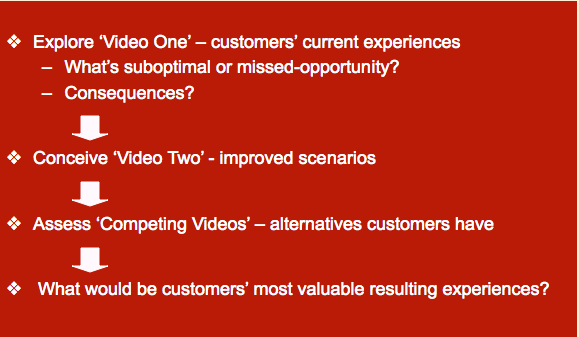

Being able to walk into the offices of an airline or a freight CEO and tell him that data might ensure that GE jet engines or locomotives would have no unplanned downtime could change the way Immelt’s company does business. GE products could almost sell themselves––or, if his competitors had the capability to do this first, stop selling at all.

Given the history of GE’s product businesses since the 1980s, it was a natural extension of that evolution for Immelt’s GE to now focus heavily on PM. Under Welch, the company had reduced its commitment to major advances in the benefits delivered by its products, shifting its relative focus to service benefits. Not surprisingly, GE now saw improved PM as a major prize of digital transformation. Winig explains the value of PM:

While many software companies like SAP, Oracle, and Microsoft have traditionally been focused on providing technology for the back office, GE is leading the development of a new breed of operational technology (OT) that literally sits on top of industrial machinery. Long known as the technology that controls and monitors machines, OT now goes beyond these functions by connecting machines via the cloud and using data analytics to help predict breakdowns and assess the machines’ overall health.

Therefore, improving PM of a customer’s GE equipment, via Big Data and analytics, made sense, but only if the benefits of reduced unplanned downtime outweighed the obstacles and cost of achieving it. That balance turned out to be disappointingly problematic.

First, reducing an industrial customer’s unplanned downtime is highly valuable only in cases where that downtime would be crucially disruptive and expensive for that customer. Such a result can occur, for example, when the unplanned downtime of one piece of equipment shuts down an entire operation for a significant period. However, when the customer’s operation has ready access to replacement or alternative equipment, the total cost of the unplanned downtime may be fairly minor. In such a case, the cost of achieving highly accurate PM may not be justified.

GE’s Bad Bet That Industrial Customers Would Willingly Share Proprietary Info

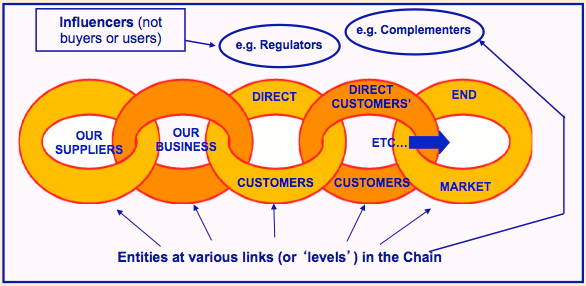

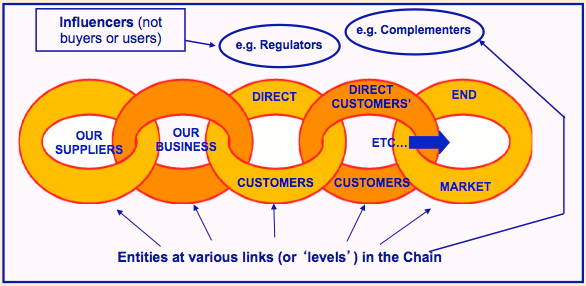

On the other hand, in some cases, eliminating or greatly reducing unplanned downtime, such as via highly accurate PM, has great value for the customer. However, such PM depends on access to extensive customer data that can be used to analyze the customer’s equipment and predict likely breakdowns. GE realized that this data needed to cover much more than its own equipment. As Winig continued:

GE had spent years developing analytic applications to improve the productivity and reliability of its own equipment…GE’s strategy is to deploy these solutions and then expand to include non-GE plant equipment as part of the solution. GE wants to go beyond helping its customers manage the performance of individual GE machines to managing the data on all of the machines in a customer’s entire operation. Customers are asking GE to analyze non-GE equipment because those machines comprise about 80% of the equipment in their facilities. They’re saying, ‘It does me no good if my $10 million gas turbine runs at 98% reliability if I have a valve that fails and shuts my entire plant down.’

Therefore, to conduct the analysis needed to improve a customer’s PM, GE needed access to data from all of a customer’s equipment, including non-GE equipment, even competitors’ machines. This requirement would pose a bigger conflict with customers’ priorities than GE first anticipated. As Winig’s Sloan Review colleague Sam Ransbotham adds:

Unsurprisingly, GE has struggled with this step, as everyone loves the idea of benefiting from everyone else’s data, but is far less excited about sharing their own—a tragedy of the commons. The potential is there, but incentives are not well aligned.

GE never made much progress convincing customers to disregard this conflict. Moreover, collecting and analyzing the data needed to improve PM proved more complex than GE expected.

Looking back in 2019, Brian Bunz in IoT World Today commented that we can still expect additional progress in PM, but that applying machine learning, including for PM, is often dauntingly complex. He cited a Bain & Co. study of 600 high-tech executives, which found that IIoT, and PM in particular, are often complex to implement, frustrating the effort to capture valuable insights.

GE’s Bad Bet on Providing the Operating System for the Industrial Internet

In an important aspect of its digital transformation, GE assumed it needed to build a new software platform that could handle massive data sets, enabling the connection and analysis of that data. Such a platform, GE thought, could enable the key, powerful analyses that would improve equipment efficiency and performance, and could thus become the “operating system of the Industrial Internet.” The company therefore began to build such a platform, which it named Predix.

However, playing such a central role for most or all industrial companies, including many competitors to GE, was not a realistic goal. More importantly, playing this “operating system” role was not necessary. Many observers have speculated on why Predix failed, asking in effect what the Predix value proposition was, and why it wasn’t delivered well enough. More fundamentally, however, Predix, and such an operating system, lacked a reason for being and thus had no superior value proposition to deliver.

GE nonetheless concluded that it should and could gain a dominant position in cloud computing, as part of becoming the operating system of the Industrial Internet, and so it launched Predix in 2013. Writing for TheStreet in June 2015, James Passeri notes that:

General Electric is betting its cloud-based Predix platform will do for factories what Apple’s iOS did for cell phones…GE has rolled out 40 apps this year for industrial companies using its rapidly growing industrial software platform, which combines device sensors with data analytics to optimize performance and extend equipment life…

However, digital giants were already providing and investing heavily in cloud-based services. Amazon Web Services (AWS) launched in 2006, Microsoft’s Azure in 2008, followed by Google and later Siemens, IBM, and others. Yet, GE seemed to imagine that since it focused on industrial––not consumer––data, it need not compete with established cloud players. Passeri quotes Bill Ruh, GE’s VP of global software, on the supposedly unique advantages of Predix:

“In the future, it’s going to be about taking analytics and making that part of our product line like a turbine, an engine or an MRI” machine… “This cloud is purpose-built for the industrial world as other clouds are built for the consumer world,” he said.

In 2016, GE and some observers believed that Predix put GE in position to lead and even control most or all IIoT software and its use. Winig, in MIT Sloan Review, comments that:

GE executives believe the company can follow in Google’s footsteps and become the entrenched established platform player for the Industrial Internet— and in less than the 10 years it took Google.

GE may have seen Microsoft as an even more relevant model for GE’s possible domination of IIoT software. Immelt in his 2017 retrospective interview in HBR continues:

When we started the digital industrial move, I had no thought of creating the Predix platform business. None. We had started this analytical apps organization. Three years later some of the people we had hired from Microsoft said, “Look, if you’re going to build this application world, that’s OK. But if you want to really get the value, you’ve got to do what Microsoft did with Windows and be the platform for the industrial internet.” That meant we would have to create our own ecosystem; open up what we were doing to partners, developers, customers, and noncustomers; and let the industry embrace it.

So we pivoted. Again we went all the way. We not only increased our investment in digital by an order of magnitude—a billion dollars—but also told all our businesses, “We’re going to sunset all our other analytics-based software initiatives and put everybody on Predix, and we’re going to have an open system so that your competitors can use it just like you can.”

However, Predix never became a widely accepted product, so it lacked the marketplace leverage of Microsoft’s Windows. Moreover, Predix’s success required access to industrial customers’ proprietary data, the same obstacle that generally inhibited GE’s success with Predictive Maintenance, as discussed earlier. In 2016, The Economist writes:

Whereas individual consumers are by and large willing to give up personal information to one platform, such as Google or Facebook, most companies try to avoid such lock-in. Whether they are makers of machine tools or operators of a factory, they jealously guard their data because they know how much they are worth.

By 2017, it was becoming clear that the triumph of Predix would be deferred, partly reflecting this conflict between the vision GE wanted and some of the key priorities of customers. In June of 2017, Ed Crooks in Financial Times writes that:

Last year there were 2.4bn connected devices being used by businesses, and this year there will be 3.1bn, according to Gartner, the research group. By 2020, it expects that number to have more than doubled to 7.6bn. …[However] the shiny digital future is arriving later than some had hoped. The potential is real, says McKinsey senior partner Venkat Atluri, but industrial companies have been slow to exploit it for a variety of reasons. They may need to change their organisations radically to benefit from the new technologies. Another obstacle is that there are so many different products and services available that industrial standards have not yet emerged.

Working with expensive and potentially hazardous machinery, industrial businesses are cautious about entrusting critical decisions to outsiders. “Customers are risk-averse, because they have to be,” says Guido Jouret, chief digital officer of ABB. “If you do something wrong, you can hurt people.”

Potential customers are also very cautious about control of the data that reveal the inner workings of their operations.

Eventually, it sank into GE that competing with the established cloud providers was not realistic, and that establishing a dominant platform was in any case pointless. Alwyn Scott in Reuters wrote later in 2017 regarding GE missteps with Predix:

Engineers initially advised building data centers that would house the “Predix Cloud.” But [after Amazon and Microsoft spent billions] on data centers for their cloud services, AWS and Azure, GE changed course…abandoned its go-it-alone cloud strategy a year ago. “That is not an investment we can compete with,” Ruh said.

It now relies on AWS & expects to be using Azure by late October. As GE pivoted away from building data centers…focused on applications, which executives now saw as more useful for winning business and more profitable than the platform alone. “That is probably the biggest lesson we’ve learned,” Ruh said. [GE] also faced legacy challenges in adapting to Predix software. GE has many algorithms for monitoring its machines, but they mostly were written in different coding languages and reside on other systems in GE businesses. This makes transferring them to Predix more time consuming.

An additional complexity facing GE Digital was that the company’s businesses were of course varied, requiring a range of solutions and value propositions. Related to this issue, tech writer and IIoT follower Stacy Higginbotham observed that GE’s IIoT effort could not work with the same solution for every customer (or segment). She writes in late 2017 that:

Since launching its industrial IoT effort five years ago, GE has spent billions selling the internet of things to investors, analysts and customers. GE is learning that the industrial IoT isn’t a problem that can be tackled as a horizontal platform play. Five years after it began, GE is learning lessons that almost every industrial IoT platform I’ve spoken with is also learning. The industrial IoT doesn’t scale horizontally. Nor can a platform provider compete at every layer. What has happened is that at the computing [layer,] larger cloud providers like Microsoft and Amazon are winning.

So when it comes to the industrial IoT, the opportunity is big. It’s just taking a while to figure out how to attack the market. Step one is realizing that it’s silly to take on the big cloud and connectivity providers. Step two is quitting the platform dreams and focusing on a specific area of expertise.

In a similar, common-sensical observation on Medium, product-management and innovation writer Ravi Kumar writes that GE’s IIoT platform failed because GE tried:

Supporting too many vertical markets: It’s difficult to build a software platform that works across many verticals. GE tried to be everything to everyone and built an all purpose platform for the wider industrial world, which was a diffused strategy.

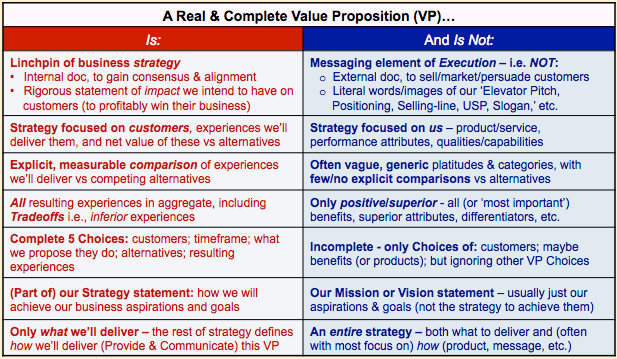

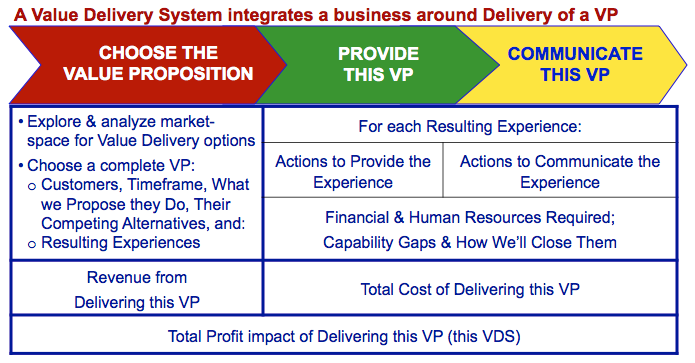

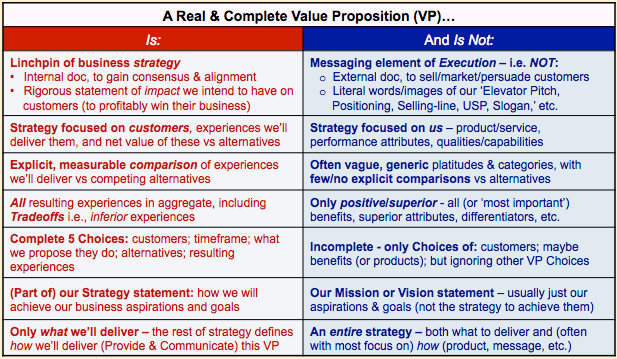

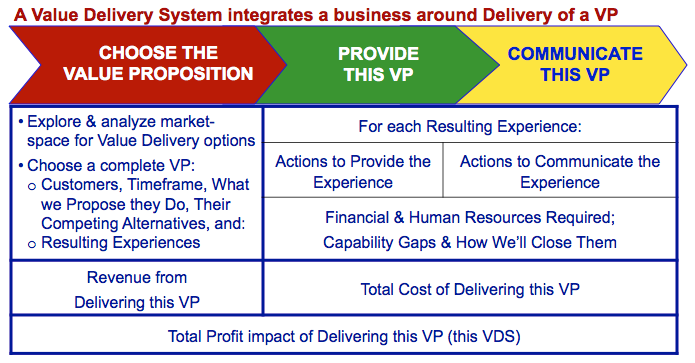

In retrospect, GE appears to have failed to examine its Predix venture in some fundamental ways, ways that a value-delivery assessment could well have identified. GE seemed to assume that customers would benefit from Predix, rather than rigorously describing those benefits, Predix’s possibly superior value proposition, and the trade-offs customers would need to make.

However, at least one company, widely applauded, recognized the real potential of the new digital technology. It did so––in contrast to GE––by using IIoT and analytics to enable the strategy of its existing business, not to build a new, separate “digital business.”

Caterpillar––Unlike GE––Used IIoT & Analytics to Enable Its Strategy

Prior to 2012, Caterpillar––the large manufacturer of construction and mining equipment––had long delivered a superior value proposition. Cat’s business model had produced lower life-time cost for customers, via superior equipment-uptime. As Geoff Colvin explains in Fortune in 2011:

The model’s goal is simple: Ensure that customers make more money using Cat equipment than using competitors’ equipment. Though Cat equipment generally costs more than anyone else’s, the model requires it to be the least expensive over its lifetime. For customers, maintenance and uptime are critical.

Kenny Rush, vice president of Sellersburg Stone in Louisville, says that’s why he’s such a fan of the Caterpillar 992 loader his firm uses in a quarry. “We’ve run that machine since 1998, and it’s had 98% to 99% availability,” he says. “It cost $1.5 million. But we ran it 22,000 hours, which is about 10 years, before replacing any major components.” Dealers are key to those economics. A machine that breaks down can halt an entire job, and getting back under way in two hours rather than 48 hours means big money. Large, successful dealers that carry lots of parts, maintain skilled technicians, and move fast are thus a major selling point, and Cat’s dealer network is the undisputed best in the business.

This one clear value proposition––superior equipment-uptime––applied to essentially all of Cat’s customers. Now, after 2012, Cat recognized that improving PM––via analytics applied to massive IIoT-data––would perfectly build on and strengthen Cat’s earlier, uptime-focused strategy. Cat should not be understood as having succeeded in executing the digital transformation strategy that GE had tried but failed to execute. Rather, Cat pursued a strategy focused on delivering its established value proposition of superior uptime, but using the new digital technology to help.

At this time, GE also tried to use the digital technology, but primarily to achieve “digital transformation,” chasing a vague notion of digital strategy. Cat instead integrated the technology into its existing business, enabling rather than replacing its strategy that had long focused on making customers more successful.

In doing so, Cat––unlike GE––did not try to create the software it needed but more realistically partnered with an analytics start-up firm, Uptake. As Lindsay Whipp writes in the Financial Times, in August 2016:

Uptake, which was reported last year to have a $1bn valuation and in which Caterpillar has an undisclosed stake, uses its proprietary software to trawl the immense amount of data collected from Caterpillar’s clients to predict when a machine needs repairing, preventing accidents and extended downtime, and saving money.

Caterpillar conducted a study of a large mining company’s malfunctioning machine, which had resulted in 900 hours of downtime and $650,000 in repairs. If the technology from the two companies’ project had been applied, the machine’s downtime would have been less than 24 hours and the repairs only $12,000.

Additional articles in 2017, in Forbes, WSJ, and Business Insider, emphasized Cat’s improvements in PM via analytics applied to IIoT-data. GE, meanwhile, continued generating hype around its software promises of IIoT applications and the utility of Predix––the “operating system for the industrial internet.” More modestly, but with more substance, Cat focused only on delivering its specific value proposition to its users. As Tom Bucklar, Cat’s Director of IoT, explains in a 2018 article in Caterpillar News:

When we start to talk about our digital strategy, we really look at digital as an enabler. At the end of the day, we’re not trying to build a digital business. We’re trying to make our customers more profitable.

However, for many industrial businesses––including most of GE’s businesses during this 2011-2018 era––the value of uptime for its equipment varies. Unplanned downtime always has some cost but avoiding it––such as by analytics––does not always justify major investments. In many cases, an operation can affordably work its way around unplanned downtime on a piece of equipment. As Kim Nash, quoting Cat’s Bucklar, explains in WSJ, some of Cat’s most complex machines may be a customer’s most crucial ones. For those, downtime is unaffordable, but a simpler machine could be easily replaced. In that case, there will not be “as much customer value in that prediction.”

Thus, GE could not have simply applied Cat’s specific, superior-uptime value proposition to GE’s businesses, each of which needed to deliver its own specific value proposition. Cat saw that it could enable its specific, uptime-focused strategy via Big Data and analytics. GE, confused, equated its strategy with using data and analytics and pursuing digital transformation, applying the same generic digital strategy to all customers.

Caterpillar did not need to develop a software platform––it simply needed to deliver its clear, superior value proposition. Likewise, GE did not need to make the Predix platform work––GE needed to choose a clear value proposition in each major business and then use the digital technology to help deliver each of those propositions successfully.

GE failed in two key ways. First, it failed to focus on specific strategies to profitably deliver superior value in its various businesses. Second, it failed to then use digital technology and capabilities to enable those value-delivery strategies. This mentality, prioritizing the enabling of strategy over the building of digital assets and skills, characterizes the approach that Caterpillar––in contrast to GE––so successfully followed. Some observers would argue today that Cat succeeded in a “digital transformation,” but it did not really transform––it simply focused on using analytics to better execute its clear, customer-focused, uptime strategy. Thus, GE failed by focusing too much on its digital transformation, working to become the first digital industrial company, and not enough on developing its value-delivery strategies.

Bitter End to GE’s Digital Delusions

Many observers, after Immelt had been forced out in 2017, continued to see GE Digital and the company’s attempted digital transformation as good ideas, executed badly. Probably the whole initiative could have been pursued more effectively, but the main problem with this third GE strategic era, as with GE’s second era––1981-2010––was the fundamental flaw in the company’s strategy. It wasn’t totally wrongheaded to pursue the development and use of the IIoT and analytics. It was wrong, however, to put this pursuit ahead of restoring GE’s historically successful strategy of creating large new, innovation-based product businesses.

However, the modest fiasco of the digital delusion was not the only major strategic error by Immelt and team after the financial crisis. The company would also fail to rethink its key energy-related strategies. Again chasing after the formulae that had worked in the past, GE’s blunders in this area would prove terminal for Immelt, and leave the company exposed to a final, predictable but misguided restructuring. We tell this final piece in the story of GE’s rise and decline in Part Four, Energy Misfire (2001-2019).

Part Two of Value-Delivery in the

Rise-and-Decline of General Electric

Illusions of Destiny Controlled & the World’s Real Losses

By Michael J. Lanning––Copyright © 2022 All Rights Reserved

This is Part Two of our four-part series on GE’s great rise and eventual dramatic decline, seen through our value-delivery lens. Businesses as much as ever need strategies that deliver superior value to customers, including via major product-innovation. Though now a faded star, GE’s lengthy history offers striking lessons on this fundamental challenge. Our two recent posts in this series provide an Introductory Overview and then Part One on GE’s first century. (Note from the author: special thanks to colleagues Helmut Meixner and Jim Tyrone for tremendously valuable help on this series.)

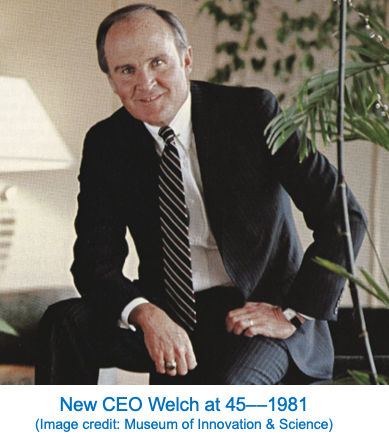

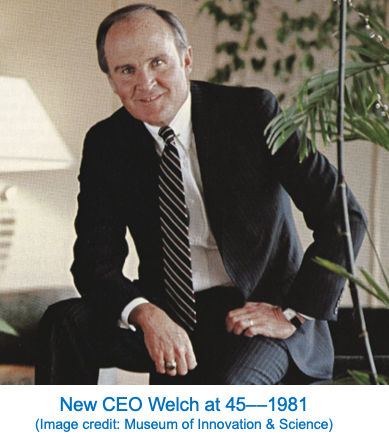

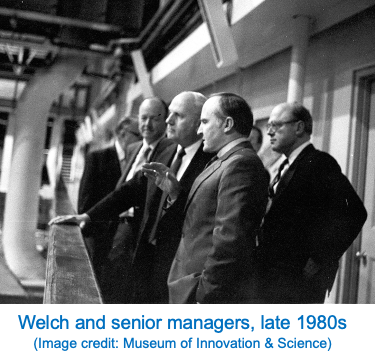

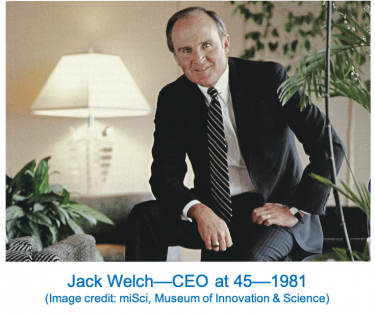

When Jack Welch became CEO in 1981, GE was already a leading global firm, with revenues of $27 billion, earnings of $1.6 billion, and market value of $14 billion. Led by Welch, GE then implemented an ingenious strategy that produced legendary profitable growth. By 2000, GE had revenues of $132 billion, earnings of $12.7 billion, and a market value of $596 billion, making it the world’s most valuable company. Yet, this triumphant GE strategy would ultimately prove unsustainable and self-destructive.

Not widely understood, the Welch strategy was a distinct hybrid. It leveraged GE’s traditional, physical-product businesses––industrial and consumer––to rapidly expand its initially-small, financial-service businesses, thereby accelerating total-GE’s growth. At the same time, it grew those product-businesses slowly but with higher earnings for GE, by cutting costs––including reduced focus on product-innovation. Thus, for over two decades after 1980, this hybrid-strategy produced the corporation’s legendary, profitable growth.

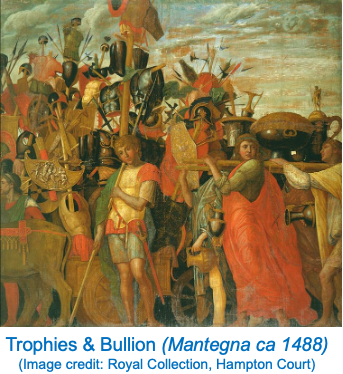

Welch did not fully explain this partially opaque and––given GE’s history––surprisingly financial-services oriented strategy. Instead, his preferred narrative was simpler and less controversial. It primarily credited GE’s great success to Welch’s cultural initiatives––not to the hybrid strategy––and emphasized total-GE growth more than that of the financial-businesses. Yet, the hybrid strategy and the crucial growth of the financial-businesses were the real if partially obscured key to GE’s growth. The initiatives did improve GE’s culture but played little role in that growth. Still, fans embraced this popular, reassuring narrative about initiatives, helping make GE the most admired––not just most valuable––company. Like the ultimately unsustainable Roman triumphs of Caesar, much of the world would hail Welch’s GE success, without recognizing its longer-term flaws.

The Welch strategy seemed to triumph for over two decades, but eventually proved a tragic wrong turn by GE––and a misguided strategic model for much of the business world. In its first century, GE built huge, reliably profitable product-businesses, based on customer focused, science based, synergistic product-innovation. Now, its new hybrid of physical-product-and-financial businesses deemphasized much of this highly successful approach. For over twenty years after 1980, it leveraged GE’s product-business strengths to enable breath-taking growth of its financial-businesses––from $931 million in 1980 to over $66 billion in 2000––in turn driving total-GE’s spectacular, profitable growth.

However, this apparent, widely celebrated triumph was in fact a major long-term strategic blunder. It replaced GE’s great focus on product-innovation-driven growth with a focus on artificially engineered, financial-business growth. That hybrid-strategy triumph produced impressive profitable growth for two decades but was ultimately unsustainable. Its growth engine was inherently limited––unlikely to sustain major growth much beyond 2000––and self-destructive, entailing higher-than-understood risk. Most fundamentally, however, it provided no adequate replacement for the historic, product-innovation heart of GE strategy.

Welch-successor Jeff Immelt and team recognized some of the limitations and risks of the hybrid strategy but lacked the courage and vision to fully replace it. In the 2008-09 financial crisis, the strategy’s underestimated risks nearly destroyed the company. GE survived, partially recovering by 2015, but having let product-innovation capabilities atrophy, GE was unprepared to create major new businesses. After 2015, lingering financial risks and new, strategic blunders would further sink GE, but its stunning decline traces most importantly to Welch’s clever yet long-term misguided, hybrid strategy.

GE’s 1981 crossroads––drift further or refocus on its electrical roots?

The GE that Jack Welch inherited in 1981 had always been a conglomerate––a multi-business enterprise. Yet, in GE’s first century it had avoided the strategic incoherence often associated with conglomerates––often unrelated businesses, assembled only on superficial financial and empire-building criteria. In contrast, though technologically diverse, most GE businesses pre-1981 shared important characteristics––nearly all manufacturing products with deep roots in electricity or electromagnetism. They thus benefited from mutually reinforcing, authentically synergistic relationships.

By the 1970s, however, GE had begun slightly drifting from those electrical roots, losing some synergy and coherence. GE plastics, originally developed for electrical insulation, evolved into large businesses mostly unrelated to electrical technology. More importantly, however, GE failed to persist in some key electrical markets. Falling behind IBM, GE exited computers in 1970. Despite software’s unmistakable importance, the company failed to develop major capabilities or business in this area. GE’s Solid State electronics division pursued semiconductors, but with limited success by the late 1970s. These markets were competitively challenging but also essential to digital technology, which has since come to dominate much of the world’s economy. New GE efforts in these markets might well have failed, but few firms could match GE’s depth of capabilities, had the company possessed the will to succeed.

In 1980, GE businesses were dominantly electrical- and electromagnetic-related. 84% of revenues were: Power; Consumer (lighting and appliances); Medical Imaging; and Aircraft Engines––linked by turbine technology to Power. 13% were plastics and mining (a major 1976 acquisition). 3% were GE Credit Corp, financing customer purchases. Thus, GE faced a strategic crossroads as Welch took the helm––continue drifting from or redouble its historical focus on product innovation in its electrical roots.

To pursue the second option, Welch and team would have needed to deeply explore the evolving preferences and behaviors of customers in electrical markets, including digital ones neglected by GE. This team might have thus discovered new value propositions, deliverable via major product innovations, reinvigorating GE growth in its core markets.

Instead, Jack Welch led the company in a new strategic direction––further from its roots. It divested mining, but increasingly embraced non-electrically based businesses––expanding plastics and pursuing the glamourous yet strategically unrelated broadcasting-business, acquiring NBC in 1986.

After the mid-1990s, moreover, GE widened its product-businesses’ focus––from primarily manufacturing-based, superior product-performance to include expanded industrial services. These proved more profitable and less reliant on product innovation.

Especially crucial, however, the new Welch strategy would also quietly but aggressively expand GE’s financial services. In his 2001 autobiography Jack: From the Gut (Warner Books), Welch recalls his first impressions of that initially unfamiliar business:

Of all the businesses I was given as a sector executive in 1977, none seemed more promising to me than GE Credit Corp. Like plastics, it was well out of the mainstream…and I sensed it was filled with growth potential… My gut told me that compared to the industrial operations I did know, this business seemed an easy way to make money. You didn’t have to invest heavily in R&D, build factories, and bend metal day after day. You didn’t have to build scale to be competitive. The business was all about intellectual capital—finding smart and creative people and then using GE’s strong balance sheet. This thing looked like a “gold mine” to me.

More difficult to execute than implied by this comment, the hybrid strategy nonetheless worked almost magically well, for two decades. GE Credit Corp (GECC)––later renamed and now termed here, in short, GEC––aggressively leveraged key strengths from GE’s traditional product-businesses. Thus, GEC would produce most total-GE growth in the Welch era, driving the company’s astounding market-value. Yet, with this hybrid strategy GE would eventually lose some of its strategic coherence, its businesses less related, while growth proved unsustainable much beyond 2000 and risk was higher-than-understood.

Meanwhile, GE’s ability to profitably deliver superior value––via customer focused, science based, synergistic product-innovation––had deteriorated. GE had taken a major wrong turn at its 1981 crossroads and would eventually find itself at a strategic dead-end.

Real cause of GE triumph––Welch-initiatives or the hybrid-strategy?

After 1981, Welch launched a series of company-wide cultural initiatives, broadly popular and emulated in the business community. These included the especially-widely adopted Six Sigma product-quality methodology. Another initiative mandated that each GE business must achieve a No. 1 or No. 2 market-share or close the business. Globalization pushed to make GE a fully global firm. Workout engaged front-line employees in adopting Welch’s vision of a less bureaucratic culture. Boundaryless encouraged managers to freely share information and perspective across businesses. Services aimed to make service, like new equipment, a central element in GE product-businesses, investing in service technologies and rapidly expanding service revenues. E-business, by the late 1990s, encouraged enthusiastic engagement with e-commerce.

These initiatives likely helped make GE’s culture less bureaucratic, more decisive, and efficient. However, as GE business results soared, the initiatives were also increasingly credited––but unjustifiably––as a primary cause of those results. A leading popularizer of this misguided narrative was Michigan business-professor Noel Tichy. His books hagiographically extolled the Welch approach. In the mid-1980s Tichy led and helped shape GE’s famous Crotonville management-education center, where GE managers were taught that the initiatives were key to GE’s success. Welch agreed, writing in Jack:

In the 1990s, we pursued four major initiatives: Globalization, Services, Six Sigma, and E-Business… They’ve been a huge part of the accelerated growth we’ve seen in the past decade.

Many shared this view, e.g., in 2008, strategic-management scholar Robert Grant writes:

Under Welch, GE went from strength to strength, achieving spectacular growth, profitability, and shareholder return. This performance can be attributed largely to the management initiatives inaugurated by Welch.

In 2005, a Manchester U. team led by Julie Froud reviewed the over fifty books and countless articles by management experts on GE in the Welch era. Froud’s team debunked the myth crediting the initiatives, finding a repeated pattern in that literature of blatantly confusing correlation with causation. Consistently––but incompetently––writers first cited GE’s inarguably impressive business results, then juxtaposed them with the initiatives––implying causality. For example, the team cites 2003 work by Paul Strebel of Swiss IMD Business School:

Strebel’s first shot announces GE’s undisputed achievement which is “two decades of high powered growth” … In the second shot, Strebel identifies key initiatives… as “trajectory drivers” that allowed the company to engineer upward shifts in “product/market innovation.”

However, the initiatives did not cause GE’s profitable growth of this era. If they had, we should find similar, dramatic growth across GE’s major business-sectors––since the initiatives were implemented throughout GE. Its product-business sector consisted of Power, Consumer, Medical Imaging, Aircraft Engines, Plastics, and Broadcasting. Its financial-business sector was GEC. As shown below, these two sectors in 1980-2000 grew at radically different rates. Product-businesses grew by +175%––merely in line with other US manufacturers––while GEC grew at the astounding rate of more than +7,000%.

GE Sales (Nominal $) by sector––1980-2000

| | Total-GE––Product-Bus’s + GEC | GE Product-Businesses | GEC (Financial Businesses) |
|---|---|---|---|
| 1980––$ Billions | 25.0 | 24.0 | 0.9 |
| 2000––$ Billions | 132.2 | 66.0 | 66.2 |
| Growth––$B (2000 as % of 1980) | 107.2 (530) | 42.0 (275) | 65.2 (7,108) |

Source (this and next three tables): GE Annual Reports, Froud et al, & author’s calculations
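The growth rates quoted above can be reproduced from this table with simple arithmetic. The minimal Python sketch below uses the table’s 1980 and 2000 figures, substituting the $931 million 1980 GEC figure cited earlier in the text for the rounded 0.9. It assumes that the table’s parenthetical figures express 2000 sales as a percentage of 1980 sales, so that percentage growth is that figure minus 100.

```python
# Nominal sales in $ billions, taken from the table above.
# GEC's 1980 value uses the $931 million cited in the text instead of the rounded 0.9.
SALES_1980 = {"Total GE": 25.0, "Product businesses": 24.0, "GEC": 0.931}
SALES_2000 = {"Total GE": 132.2, "Product businesses": 66.0, "GEC": 66.2}


def growth_stats(start: float, end: float) -> tuple[float, float]:
    """Return (end level as % of start level, percentage growth from start to end)."""
    level_pct = end / start * 100
    return level_pct, level_pct - 100


for sector in SALES_1980:
    level_pct, growth_pct = growth_stats(SALES_1980[sector], SALES_2000[sector])
    print(f"{sector}: 2000 = {level_pct:,.0f}% of 1980, growth = +{growth_pct:,.0f}%")
```

With these inputs, the sketch gives +175% for the product businesses and roughly +7,000% for GEC, in line with the text; small differences from the table’s parentheticals presumably reflect rounding in the published figures.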

GE’s product-businesses also grew in this period, but only cyclically and dramatically slower than GEC. They grew at least 10% in only four of these twenty years, with average annual growth less than 6%. In contrast, GEC grew more than 10% every year but one (1994) with average annual growth over 26% and strong growth throughout (except 1994).

Average Annual Growth (%) by Sector––1981-2000

| | Total-GE: Product-Bus’s + GEC | GE Product-Businesses | GEC (Financial Businesses) |
|---|---|---|---|
| 1981-2000 | 8.9 | 5.7 | 26.7 |
| 1981-1990 | 9.3 | 6.7 | 36.6 |
| 1991-2000 | 8.6 | 4.7 | 16.7 |
| 1995-2000 | 13.7 | 8.6 | 22.3 |

GE Real Sales (2001 Prices, net of inflation) by sector––1980-2000

| | Total-GE: Product-Bus’s + GEC | GE Product-Businesses | GEC (Financial Businesses) |
|---|---|---|---|
| 1980––$ Billions | 57.4 | 54.4 | 3.0 |
| 2000––$ Billions | 141.5 | 72.5 | 69.0 |
| Growth––$B (2000 as % of 1980) | 84.1 (246) | 18.1 (133) | 66.0 (2,280) |
| CAGR (Compound Annual Growth Rate) | 4.6% | 1.4% | 16.9% |
| Real GEC-Growth as % of Real Total-GE Growth (66.0/84.1) | | | 78% |

Thus, most GE real-growth in this era traced to GEC. In contrast to GEC’s more-than-2,100% real-growth, the product-businesses’ 33% paled. Those businesses’ Compound Annual Growth Rate (CAGR) in real revenues was only 1.4%, while GEC’s was 16.9% and its $66 billion real-growth was 78% of total-GE’s $84 billion real-growth. Clearly, GE’s 1981-2000 by-sector results refute the standard, initiatives-focused GE narrative.
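The CAGR row of the real-sales table and the 78% share both follow from standard formulas: CAGR = (end / start)^(1 / years) - 1, and GEC’s share of growth is its real-sales increase divided by total-GE’s. A minimal Python sketch, assuming the table’s rounded 1980 and 2000 figures and a 20-year span:

```python
# Real sales in 2001 prices, $ billions, taken from the real-sales table above.
REAL_1980 = {"Total GE": 57.4, "Product businesses": 54.4, "GEC": 3.0}
REAL_2000 = {"Total GE": 141.5, "Product businesses": 72.5, "GEC": 69.0}
YEARS = 20  # 1980 to 2000


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate: the constant yearly rate that turns start into end."""
    return (end / start) ** (1 / years) - 1


for sector in REAL_1980:
    rate = cagr(REAL_1980[sector], REAL_2000[sector], YEARS)
    print(f"{sector}: CAGR {rate:.1%}")

# GEC's contribution to total-GE real growth, in $ billions and as a share.
gec_growth = REAL_2000["GEC"] - REAL_1980["GEC"]
total_growth = REAL_2000["Total GE"] - REAL_1980["Total GE"]
print(f"GEC share of real growth: {gec_growth / total_growth:.0%}")
```

With these rounded inputs the sketch returns about 4.6% for total GE, 1.4% for the product businesses, and about 17% for GEC (the table’s 16.9% presumably reflects unrounded source data), with GEC accounting for about 78% of real growth.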

GE’s great, profitable growth during this period primarily reflected––not the Welch initiatives––but his real, hybrid strategy. To comprehend GE’s phenomenal rise, and eventual decline, today’s managers need to understand that hybrid strategy.

A Hybrid Product/Financial-Business––the Real Welch-Strategy

In 1987, GECC was renamed GE Capital Services––“Capital” to many and here simply termed GEC. By then it had evolved into a large, fast-growing multi-unit financial-business, with $3.9 billion revenues––about 10% of total GE. As Froud et al discuss, GEC had developed a wide range of financial services, including for example:

1967––the start of airline leasing with USAir; subsequently leading to working capital loans for distressed airlines [1980s] … By 2001…managed $18 billion in assets

1983––issues private-label credit card for Apple Computer; first time a card was issued for a specific manufacturer’s product

1980s––employers’ insurance, explicitly to help offset cyclicality in the industrial businesses

1980s––became a leader in development of the leveraged buyout (LBO)

1992––moved into mortgage insurance

1980s-90s––one of largest auto finance companies…and [briefly] sub-prime lending in autos

Though quietly leading total-GE growth throughout the Welch era, GEC was not centrally featured in GE’s primary narrative, which focused mostly on total-GE and the Welch initiatives. Nonetheless, the world increasingly noticed that GE’s initially-small financial-business––not just total-GE––was growing explosively, requiring its own explanation.

Thus, to explain its spectacular growth, a secondary narrative emerged emphasizing GEC’s entrepreneurial, growth-obsessed perspective, derived from GE. There is some truth in this explanation––although GEC was a financial-business, it proactively applied GE’s depth of product-business experience and skill. By 1984, Thomas Lueck in the NY Times had noted that, “…Mr. Wright said that his executives are able to rely heavily on technical experts at General Electric to help them assess the risk of different businesses and product lines.” Later, in 1998, John Curran in Fortune primarily explains GEC’s success in such terms of experience and skills:

The model is complex, but what makes it succeed is not: a cultlike obsession with growth, groundbreaking ways to control risk, and market intelligence the CIA would kill for… [GEC CEO Wendt] readily acknowledges the benefits Welch has brought to every corner of GE: a low-cost culture and the free flow of information among GE’s divisions, which gives Capital access to the best practices of some of the world’s best industrial businesses…

All five of Capital’s top people are longtime GE employees [including three] from GE’s industrial side… This unusual combination of deal-making skill and operations expertise is one of the keys to Capital’s success. Capital not only buys, sells, and lends to companies but also, unlike love ’em and leave ’em Wall Street, excels at running them. … Says Welch: “It is what differentiates [GEC] from a pure financial house.” [GEC’s] ability to actually manage a business often saves it from writing off a bad loan or swallowing a leasing loss.

Continuing, Curran focuses on GEC’s growth-obsession, especially via acquisitions:

The growth anxiety is pervasive… Says Wendt: “I tell people it’s their responsibility to be looking for the next opportunity…” Capital’s growth comes in many forms, but nothing equals the bottom-line boost of a big acquisition. Says [EVP] Michael Neal: “I spend probably half my time looking at deals, as do people like me, as do the business leaders.” Over the past three years Capital has spent $11.8 billion on dozens of acquisitions.

Moreover, we should not underestimate the skill that GE displayed in using acquisitions to build its huge, complex collection of financial businesses. Major misjudgments “would have undermined GE’s financial record,” Froud et al write, explaining that:

By way of contrast, Westinghouse, GE’s conglomerate rival, had its finance arm liquidated by the parent company after losing almost $1 billion in bad property loans in 1990.

Therefore, these entrepreneurial, growth focused product-business skills clearly gave GEC an advantage over competing financial companies, thus helping it succeed. However, its leaders, including Bossidy, Wright, Wendt, and Welch, had the insight to see that GEC could also enjoy two other, decisive advantages over financial-business competitors. These two advantages, much less emphasized in popular explanations of GEC’s success, were its lower cost-of-borrowing and its greater regulatory-freedom to pursue high returns.

Profitability of a financial-business is determined by its “net interest spread.” This spread is the difference between the business’ cost-of-borrowing––or cost-of-funds––and the returns it earns by providing financial services––i.e., by lending or investing those funds. GEC’s and thus GE’s growth, until a few years after 2000, was enabled by the combination of its entrepreneurial, growth-focused skills and––most importantly––the two key advantages it enjoyed on both sides of this crucial net-interest-spread.

GEC’s cost-of-borrowing advantage––via sharing GE’s credit-rating

Of course, a business’ credit rating is fundamental to its cost-of-borrowing. During and largely since the Welch era, the norms of credit-rating held that a business belonging to a larger corporation would share the latter’s credit rating––so long as that smaller business’ revenues were less than half those of the larger corporation. Thus, GEC during the Welch era shared GE’s exceptionally high––triple-A or AAA––credit rating. It thus held a major cost-of-borrowing advantage over most other financial-business competitors.

GE’s own triple-A rating reflected the extraordinary financial strength and reliability of its huge, traditional product businesses. As Froud et al write in 2005:

GE Industrial may be a low growth business but it has high margins, is consistently profitable over the cycle… This solid industrial base is the basis for GE’s AAA credit rating, which allows [GEC] to borrow cheaply the large sums of money which it lends on to…customers.

In 2008, Geoff Colvin writes in Fortune that while GEC helps GE by financing customers:

In the other direction, GE helps GE Capital by furnishing the reliable earnings and tangible assets that enable the whole company to maintain that triple-A credit rating which is overwhelmingly important to GE’s success. Company managers call it “sacred” and the “gold standard.” Immelt says it’s “incredibly important.”

That rating lets GE Capital borrow funds in world markets at lower cost than any pure financial company. For example, Morgan Stanley’s cost of capital is about 10.6%. Citigroup is about 8.4%. Even Buffett’s Berkshire Hathaway has a capital cost of about 8%. But GE’s cost is only 7.3%, and in businesses where hundredths of a percentage point make a big difference, that’s an enormously valuable advantage. [emphasis added]
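Colvin’s figures can be translated into a rough sense of scale. The sketch below converts them into basis points (one basis point is a hundredth of a percentage point) and applies each gap to a hypothetical $100 billion of funding. It makes one simplifying assumption: it treats these cost-of-capital rates as if they applied directly to borrowed funds, even though cost of capital blends debt and equity.

```python
# Sketch: GE's funding-cost advantage using the cost-of-capital figures
# Colvin quotes (Fortune, 2008), expressed in basis points.
# ASSUMPTIONS: the rates are treated as if they applied directly to borrowed
# funds, and the $100 billion funding base is hypothetical.

COST_OF_CAPITAL_BPS = {
    "Morgan Stanley": 1060,      # ~10.6%
    "Citigroup": 840,            # ~8.4%
    "Berkshire Hathaway": 800,   # ~8%
    "GE": 730,                   # ~7.3%
}

FUNDING_BASE = 100_000_000_000  # hypothetical $100 billion borrowed
BPS_PER_UNIT = 10_000           # 10,000 bps = 100%


def advantage_bps(rival: str, firm: str = "GE") -> int:
    """How many basis points cheaper `firm`'s capital is than `rival`'s."""
    return COST_OF_CAPITAL_BPS[rival] - COST_OF_CAPITAL_BPS[firm]


def annual_saving(bps: int, base: int = FUNDING_BASE) -> int:
    """Dollars saved per year on `base` dollars of funding at a `bps` advantage."""
    return base * bps // BPS_PER_UNIT


for rival in ("Morgan Stanley", "Citigroup", "Berkshire Hathaway"):
    bps = advantage_bps(rival)
    print(f"vs {rival}: {bps} bps -> ${annual_saving(bps):,} per year")

print(f"one basis point: ${annual_saving(1):,} per year")  # $10,000,000
```

On these assumptions, the narrowest gap is 70 basis points against Berkshire Hathaway, and it is worth about $700 million a year. Each single basis point is worth about $10 million a year.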

While GEC maintained it, the triple-A rating (and thus, generally, a lower cost-of-borrowing) was a highly valuable advantage. It allowed GEC to grow rapidly by profitably delivering superior value propositions to customers, in the form of various financial services at competitively lower costs: interest and fees. It also provided low-cost funding for GEC’s aggressive, serial acquisition of new companies, further helping GEC expand rapidly. However, sustaining this credit rating required that GEC stay below 50% of total GE’s size. If it continued its torrid growth, GEC would approach that limit by about 2000. As The Economist writes in late 2002:

As GE Capital has grown (from under 30% of the conglomerate’s profits in 1991 to 40% in 2001), its prized triple-A credit rating has come under pressure… rating agencies say that they like the way GE manages its financial businesses. But they make it clear that GE can no longer allow GE Capital to grow faster than the overall company without sacrificing the triple-A badge. (Only nine firms still have that deep-blue-chip rating.) No matter how well run, financial firms are riskier than industrial ones, so the mix at GE must be kept right, say the agencies. As usual, the agencies seem to be behind the game: the credit markets already charge GE more than the average for a triple-A borrower.

The strength of this rating eroded over the rest of that decade. By 2008, many investors doubted GE’s triple-A rating. As Colvin continues:

The credibility of bond ratings in general tumbled when it was revealed that securitized subprime mortgages had been rated double- or triple-A. GE’s rating clearly meant nothing to investors who bid credit default swaps on company bonds…. The message of the markets: The rating agencies can say what they like; we’ll decide for ourselves.

Finally, in 2009, as the financial crisis unfolded, GE lost its triple-A rating. Nonetheless, GEC’s credit-rating, and thus borrowing-cost, advantage had been a key factor in its success until 2008, and especially in the 1981-2000 Welch era.

GEC’s returns advantage––via sharing GE’s regulatory-classification

Though less widely known than its cost-of-borrowing advantage, GEC also held a second major advantage: greater regulatory freedom to earn high returns on its services. In this era, businesses were classified, for purposes of financial regulation, as either financial or industrial (i.e., non-financial). GEC clearly sold financial services, but as part of GE it was allowed to share the corporation’s “industrial” regulatory-classification. Echoing the credit-rating rules, GEC could keep this classification so long as its revenues stayed below 50% of total-GE’s.

With that classification, GEC was largely free from the financial regulations facing its competitors. As early as 1981, Leslie Wayne writes in the NY Times, GEC’s “…success has drawn the wrath of commercial bankers who compete against it but face Government regulations that [GEC] does not.” The bankers had a point, and it was still valid twenty years later. GEC’s competitors faced costly and restrictive regulatory requirements that GEC avoided. These included required levels of financial reserves, ratios of assets to liabilities, and the Federal Reserve’s oversight and monitoring of asset quality and valuation. Moreover, GEC’s industrial classification gave it more freedom to expand aggressively via acquisitions and divestitures, as Leila Davis and colleagues at UMass write in 2014:

Laxer regulation compared to [that for] traditional financial institutions has allowed GE to move into (and out of) a wider spectrum of financial services, with considerably less regulatory attention, than comparable financial institutions.

However, GEC’s regulatory freedom via its industrial-classification let it save costs but only by exposing the company to higher financial risks––against which the regulations had been intended to protect “financial” businesses. GEC’s industrial classification left it freer than competitors to earn high returns, unless and until related higher risks came home to roost––as they did in 2008. An example of saving costs but increasing risk was the restructuring of GE’s balance-sheet in this era, in favor of debt. As Froud et al explain:

Because GE does not have a retail banking operation it needs large amounts of debt finance to support its activities of consumer and commercial financing. Thus, the decision to grow [GEC] has resulted in a transformation in GE’s balance sheet. Most of the extra capital comes in the form of debt not equity: at the consolidated level, equity has fallen from around 45 per cent of long term capital employed in 1980 to around 12 per cent by the late 1990s… Almost all of the liabilities are associated with GECS. This restructuring…has been achieved through very large issues of debt: for example, in 1992, Institutional Investor estimated that GE issued $5 to $7bn of commercial paper [a form of inexpensive short-term financing] every day…

This growth in debt would typically impose costs and constraints on a company classified as “financial,” but GEC’s industrial classification let it avoid most of this burden, at least until the early 2000s. The higher debt did, however, increase the interest-rate and other risks that GEC faced. Yet for over twenty years, this financial restructuring drew little challenge. After all, GE-and-GEC results were consistently outstanding in this era. And, as Froud’s team write, analysts may not have fully understood the GEC numbers:

GE is generally followed by industrial analysts because it is classed as an industrial, not a financial firm… Arguably most industrial analysts will have limited ability to understand [GEC], whose financial products and markets are both bewilderingly various and often disconnected from those in the industrial businesses.

This silence was finally broken in 2002 by an investor outside the analyst community, as Alex Berenson writes in the NY Times:

William Gross, a widely respected bond fund [PIMCO] manager, sharply criticized General Electric yesterday, saying that G.E. is using acquisitions to drive its growth rate and is relying too much on short-term financing. “It grows earnings not so much by the brilliance of management or the diversity of their operations, as Welch and Immelt claim, but through the acquisition of companies––more than 100 companies in each of the last five years––using… GE stock or cheap…commercial paper” … Though the strategy appears promising in the short run, it increases the risks for G.E. investors in the long run, he said. If interest rates rise or G.E. loses access to the commercial paper market, the company could wind up paying much more in interest, sharply cutting its profits.

Gross also suggests in CNN Money that GE ignored financial regulatory requirements:

“Normally companies that borrow in the [commercial paper] market are required to have bank lines at least equal to their commercial paper, but GE Capital has been allowed to accumulate $50 billion of unbacked [commercial paper] …” Gross said.

Of course, GEC could legally disregard this financial regulatory requirement thanks to its industrial classification: it was regulated as an industrial, not a financial, business. Froud et al add that, “Overall Gross stated that he was concerned that GE, which should be understood as a finance company, was exposed to risks that were poorly disclosed.” Markets briefly reacted to this criticism, but GE weathered the storm easily enough.

As of 2000, the hybrid strategy had successfully produced the company’s legendary, profitable growth. However, two key aspects of GE’s situation had also changed for the worse. During the Welch era GE had significantly reduced its emphasis on product innovation. At the same time, the twenty-year lifetime of growth produced by the hybrid strategy was coming to its inevitable end. Storm clouds would soon enough hover over GE.

Deemphasis on product-innovation during the Welch era

Under Welch and the hybrid strategy, GE missed a huge opportunity to fully extend its great tradition of product-innovation into some of the most important and––for GE, intuitively obvious––electrical markets. These included computers, software, semiconductors, and others––dismissed by Welch and team as bad businesses for GE, in contrast to the “more promising” financial businesses that “seemed an easy way to make money.” Yet, those neglected electrical businesses, despite the need to “invest heavily in R&D, build factories, and bend metal day after day,” evolved into today’s world-dominating digital technologies, where GE might have later become a leader, not spectator.

GE did not wholly abandon product-innovation during the Welch era. The company continued making significant incremental innovations in its core industrial businesses of power, aviation, and medical imaging. Nonetheless, beyond failing to create new businesses in the emerging digital-technology markets, the company seemed to deemphasize product innovation generally. It was apparently carried away by the giddy excitement of rapidly accelerating financial services. Other observers have made similar points; for example, Rachel Silverman writes in the WSJ in 2002:

In recent decades, much of GE’s growth has been driven by units such as its NBC-TV network and GE Capital, its financial-services arm; the multi-industrial titan had shifted focus toward short-term technology research…Some GE scientists had been focusing on shorter-term, customer-based projects, such as developing a washing machine that spins more water out of clothes. That irked some of the center’s science and engineering Ph.D.’s, who thought they were spending too much time fixing turbines or tweaking dishwashers. Some felt frustrated by the lack of time to pursue broader, less immediate kinds of scientific research… “Science was a dirty word for a while,” says Anil Duggal, a project leader…

Steve Lohr writes in 2010 in the NY Times:

Mr. Immelt candidly admits that G.E. was seduced by GE Capital’s financial promise––the lure of rapid-fire money-making unencumbered by the long-range planning, costs and headaches that go into producing heavy-duty material goods. Other industrial corporations were enthralled with finance, of course, but none as much as G.E., which became the nation’s largest nonbank financial company.

James Surowiecki writes in The New Yorker in 2015:

In the course of Welch’s tenure, G.E.’s in-house R. & D. spending fell as a percentage of sales by nearly half. Vijay Govindarajan, a management professor at Dartmouth…told me that “financial engineering became the big thing, and industrial engineering became secondary.” This was symptomatic of what was happening across corporate America: as Mark Muro, a fellow at the Brookings Institution, put it to me, “The distended shape of G. E. really reflected twenty-five years of financialization and a corporate model that hobbled companies’ ability to make investments in capital equipment and R. & D.”

Later still, USC’s Gerard Tellis in Morning Consult in 2019 argues in retrospect that “GE rose by exploiting radical innovations,” adding that:

The real difference between GE on the one hand and Apple and Amazon on the other is not industry but radical innovation. GE focused on incremental innovations in its current portfolio of technologies. Apple and Amazon embraced radical innovations, each of which opened new markets or transformed current ones. [Managers should:] Focus on the future rather than the past; target transparent innovation-driven growth rather than manipulate cash; and strive for radical innovations rather than staying immersed in incremental innovations.

So, GE’s product-innovation capabilities decayed somewhat under Welch. Still, the hybrid strategy was undeniably effective, so we can speculate about whether GE might have found a better way to use it. Perhaps GE could have leveraged the product businesses’ strengths to grow GEC without deemphasizing those businesses’ delivery of superior value via customer-focused, science-based, synergistic product-innovation.

Yet a revised hybrid-strategy would likely still have struggled. It would have presented the same financial risks that GE badly underestimated. Its focus on financial services, so different from the product businesses, would still have created strategic incoherence for GE. Probably most crucial, such a revised strategy would have required a robust, renewed embrace of the fundamental product-innovation principles that GE had developed and applied in its first century. Those principles were very different from the GE that evolved under Welch.

This decay in product-innovation capabilities did not prevent the company from achieving its stellar financial results through 2000. However, it left GE facing a dead end should the hybrid strategy falter after 2000, which soon enough it did.

Dead-end finale for GE’s unsustainable triumph––2001-2009